There's no such thing as too many backups. An example.

A guy I know (let's call him user C) amused himself by playing with an incremental backup scheme based entirely on tar:

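```bash
#!/bin/bash
# Based on ideas of Alessandro "AkiRoss" Re
# http://blog.ale-re.net/2011/06/incremental-backups-with-gnu-tar-cron.html

MONTH=$(date +%Y%m)
DAY=$(date +%Y%m%d)

BCKDIR=/bam/backup
ARCDIR=/bam/backup/archives
SRCDIR=/bam/backup/snapshots/deepgreen

TARGETFILE="$ARCDIR/nb_snapshot_$DAY.tar.zz"
# One snapshot (.snar) file per month: the first run of a month finds no
# .snar file and produces a full (level-0) archive; later runs are incremental.
LOGFILE="$ARCDIR/nb_snapshot_$MONTH.snar"
# File with exclude patterns passed to tar via -X
EXCLUDEFILE="$BCKDIR/nb_socketlist"

# -g: GNU tar listed-incremental mode; pigz compresses on all cores,
# --zlib writes zlib (.zz) format, --rsyncable keeps the output rsync-friendly
tar -c -X "$EXCLUDEFILE" -g "$LOGFILE" -f - "$SRCDIR" | pigz --fast --rsyncable --zlib > "$TARGETFILE"
```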
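Since the snapshot file only matters when archives are created, restoring from such a chain boils down to unpacking the archives in order. The following is a minimal sketch under stated assumptions, not part of user C's setup: `restore_chain` is a hypothetical helper, and the archives are expected oldest first, starting with the month's full archive. GNU tar ignores the snapshot file's contents on extraction, so `-g /dev/null` merely switches on incremental semantics, which also removes files that had vanished by the time a later archive was made.

```bash
#!/bin/bash
# Hypothetical helper: restore a chain of zlib-compressed GNU tar
# incremental archives into a destination directory.
# Assumption: archives are given oldest first, beginning with the
# month's full (level-0) archive.
set -euo pipefail

restore_chain() {
    local dest="${1:?usage: restore_chain DEST ARCHIVE...}"
    shift
    mkdir -p -- "$dest"
    local archive
    for archive in "$@"; do
        echo "restoring $archive" >&2
        # pigz recognizes the zlib (.zz) format when decompressing stdin.
        # -g /dev/null switches tar to incremental extraction: each
        # archive's directory listings are replayed, so files deleted
        # between two backups are removed from DEST again.
        pigz -dc < "$archive" | tar -x -g /dev/null -C "$dest" -f -
    done
}
```

Because the archive names carry zero-padded `YYYYMMDD` dates and bash sorts glob results, a glob over one month's archives (the `nb_snapshot_` prefix plus that month's `YYYYMM`) already expands in chronological order.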

The script worked well, and the backups kept piling up. User C realized that he needed a way to limit how long backups are kept, so he added the following line to the end of the script:

```bash
find $ARCDIR -type f ! -newermt "1 month ago" -print0 | xargs -0 rm -f
```

Well, actually ... the line user C really used was ... hmmm ... slightly different. Instead of '$ARCDIR', he had given find '.' as its starting point, i.e., whatever the current working directory happened to be.

Ooops.

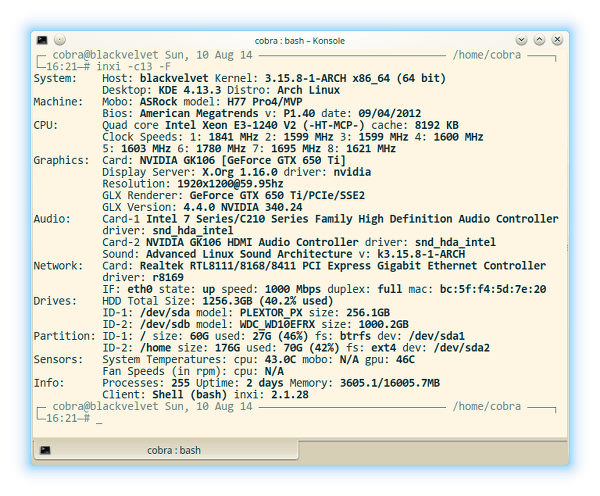

User C is of course my own dumb self. But dumb or not, my backup concept has worked: I had a backup taken minutes before the unintentional purge of my entire home directory, and was able to resume normal operation within the hour. This hourly incremental backup resides on an internal disk different from the one holding my system and home partitions, and is synchronized every night with my NAS.
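In hindsight, the pruning step deserved more paranoia than a one-liner. Note that even the "correct" line is fragile: if `$ARCDIR` were ever empty, the unquoted expansion would vanish and GNU find would fall back to the current directory. Below is a minimal defensive sketch, assuming that only files following the script's `nb_snapshot_*` naming should ever be deleted; `prune_archives` is a hypothetical helper, not what user C actually runs.

```bash
#!/bin/bash
# Hypothetical defensive replacement for the one-line pruning step.
# Assumption: only files named nb_snapshot_* (archives and .snar files
# created by the backup script) may ever be deleted.
set -euo pipefail

prune_archives() {
    # Abort the whole (non-interactive) script if DIR is unset or empty,
    # instead of letting find fall back to the current directory.
    local dir="${1:?usage: prune_archives DIR [AGE]}"
    local age="${2:-1 month ago}"

    # Refuse relative paths such as '.', whose meaning depends on the cwd.
    if [[ $dir != /* ]]; then
        echo "prune_archives: refusing relative path '$dir'" >&2
        return 1
    fi
    if [[ ! -d $dir ]]; then
        echo "prune_archives: '$dir' is not a directory" >&2
        return 1
    fi

    # -maxdepth 1: never descend into subdirectories;
    # -name: only match files produced by the backup script;
    # ! -newermt: last modified at or before AGE (GNU find date syntax).
    find "$dir" -maxdepth 1 -type f -name 'nb_snapshot_*' \
        ! -newermt "$age" -print -delete
}

# At the end of the backup script:
# prune_archives "$ARCDIR"
```

One caveat applies to any purely age-based pruning of this scheme: a month's full archive can expire while later incrementals of the same month are still kept, and those are only restorable together with it.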

Now, let's get technical. How do I ensure that I have a backup when I need it?

That's really very simple: I use software that makes creating and restoring backups as foolproof as possible. And that means:

(i) The backup software is command-line oriented and thus fits seamlessly into a cron- and script-based infrastructure, making automation of the whole process a breeze.

(ii) The backup software's configuration is script-based and thus transparent and straightforward to revise, keep, and document.

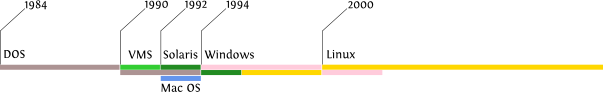

For several years now, I've used rsnapshot at home and rdiff-backup at the office. To back up the home directories of my notebooks, I transferred them to my desktop using plain rsync, and the tar script above then created incremental backups of these directories as well. That's a very heterogeneous and altogether outdated solution, and although it has served me well for years, I've recently decided to give my backup scheme a complete overhaul. In particular, I wanted one solution covering all my use cases. To my delight, I've discovered that a number of new backup programs for Linux are currently under active development, some of which boast features such as global deduplication, efficient compression, and optional GPG encryption.

Here's a (certainly incomplete) list of contenders I will examine in the near future:

- attic
- backshift
- burp
- hashbackup
- obnam
- zbackup

In any case, at the end of each day, once all hourly backups are done, I sync them to the NAS:

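```bash
#!/bin/bash
# Push the whole backup tree to the NAS; the "::" syntax addresses the
# module snapshot_blackvelvet on the NAS's rsync daemon
rsync -az /bam/backup/ cobra@thecus::snapshot_blackvelvet
```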
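If the nightly sync could ever overlap with a backup job that is still writing, the NAS might receive a half-written archive. One way to rule that out is a shared lock. The following is a minimal sketch assuming every backup script gets the same wrapper; `with_backup_lock` and the lock path are hypothetical, while `flock` is the util-linux tool.

```bash
#!/bin/bash
# Hypothetical wrapper that serializes backup jobs and the NAS sync.
# Assumption: every backup script runs its work through with_backup_lock,
# using the same (hypothetical) lock file.
set -euo pipefail

LOCKFILE=${LOCKFILE:-/var/lock/bam-backup.lock}

with_backup_lock() {
    # Open the lock file on fd 9 for a subshell; the lock is released
    # automatically when the subshell exits.
    (
        # Wait up to an hour for a running job to finish, then give up.
        flock -w 3600 9 || { echo "could not get backup lock" >&2; exit 1; }
        "$@"
    ) 9>"$LOCKFILE"
}

# In the sync script:
# with_backup_lock rsync -az /bam/backup/ cobra@thecus::snapshot_blackvelvet
```

The wrapper returns the wrapped command's exit status, so cron still sees a failed rsync as a failure.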

I know, I know: if the house burns down, I'm going to lose this replica of my backup as well. Gimme a 100 Mbit/s upload. Then we'll talk.