I receive a constant flow of applications for PhD student and postdoc positions. A quick check of my trash folder in kmail reveals that the number of applications over the past 4 months is at least 27. Including the 3 I didn't trash, that makes 30 in four months, or about 90 applications per year.

Fortunately, I don't have to spend much time (<10 s) on most of them. Over the years, I've established the following policy:

(i) If the application is not sent to me, but to unspecified recipients: trash it.

(ii) If the cover letter starts with 'Dear Professor' without explicitly referring to a specific person, or with 'Dear Professor Paul Drude' and thus referring to the wrong guy, or with 'Dear Professor Braudd' or any other sad imitation of my actual name: trash it.

(iii) If the cover letter has no reference to where I work, or what I do: trash it.

(iv) If the cover letter contains several grammatical or typographical errors: trash it.

If the applicant has made it that far, I will at least send a brief mail informing him or her that we don't have a position available at the moment. In the unlikely case that we are indeed looking for somebody at this very moment, I'll have a quick (!) look at the attached résumé or curriculum vitae. What I see then is often pathetic (poorly formatted Word files with more grammatical errors and typos than you'd expect even from a mediocre high school student) and rarely informative: Chinese applicants frequently refer to their role as 'model student of the year' and their perfect command of the English language, while their Indian counterparts seem to view their enthusiasm for cricket as essential information.

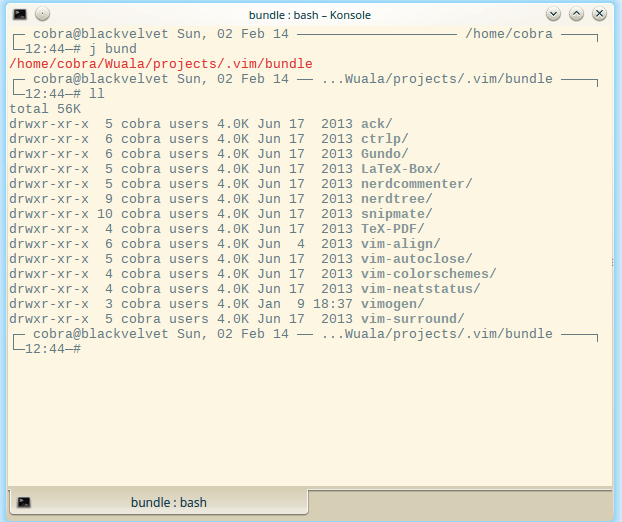

In some cases, however, I'm pleased by what I see. An astonishingly high number of these outstanding cases are prepared by applicants in German-speaking countries using the 'moderncv' class of LaTeX. This choice not only guarantees a well-structured and aesthetically pleasing résumé, but also serves as proof that the applicant has no problem dealing with LaTeX (which is definitely a plus in the natural sciences).

The disadvantage is that applications based on moderncv in its default configuration all look the same, and lack any obvious individual characteristics. For me, that's such a serious drawback that I do not use the moderncv class for my own résumé, but still the one I improvised 25 years ago, based on the article class and with all formatting done in the tabbing environment. Now, I'm not looking for a job, but I still need to attach my résumé to project applications, and at times I muse about how nice it would be to start from scratch and base my résumé on a more modern foundation.

Well, on a lazy afternoon I searched the net and came across several interesting templates for a résumé or curriculum vitae. Technically, the template provided by Adrien Friggeri is very attractive: it employs TikZ for the header, XeTeX and fontspec to use an arbitrary otf font, and biblatex/biber to automatically print selected sets of publications. The design is on the edge of being extroverted, but that's easy to change.

I'm currently playing a little with this template, and I'm really enjoying it. In particular, it never fails to impress me how much we can compress information in the digital age. We can put a world into a single link, and I wonder why I don't see that more often in applications from young (and thus 'digitally native') researchers.

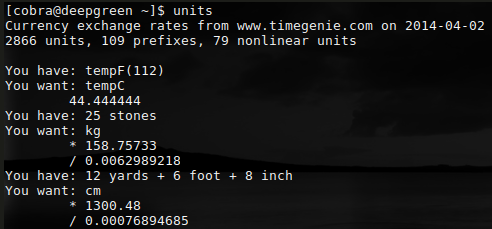

Here's one example:

All of the lines following 'about' are active links when viewed in a pdf reader. They show my identity as a researcher from different perspectives, but foremost, they list my publications and citations. What could be more important?

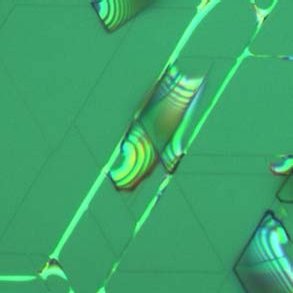

Here's another:

Only one link, but an important one. And certainly, I would treat an application offering explicit values of the publication indices very favorably. 😉

What are these indices? Well, they are more or less smart attempts to compress the 'value' of a certain scientist's research into a single number. The most popular and prominent one is the h index: the largest number h such that h of your papers have been cited at least h times each. It is criticized for several reasons, among them the fact that it can be estimated quite well from the total number of citations alone (in my case, the agreement is almost perfect), so it adds little information. The g index, the largest number g such that your g most-cited papers together have at least g² citations, does not have this weakness, but is more difficult to estimate (I use a script for that). Finally, the i10 index has been created by Google in an attempt to promote its Google Scholar service. It simply counts the papers with at least 10 citations, so its determination is trivial, and it essentially serves to separate the wheat from the chaff.
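For the curious, here is a minimal Python sketch of how the three indices can be computed from a list of per-paper citation counts. It is not the script mentioned above, just an illustration of the standard definitions. The function names and the sample citation counts are made up for this example, the g index is capped at the number of papers (one common convention), and the rule of thumb h ≈ 0.54·√N is an assumption about which estimate is meant when saying the h index follows from the total number of citations N.

```python
from itertools import accumulate
from math import sqrt


def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    # With counts sorted in descending order, "count >= rank" holds for
    # exactly the first h ranks, so counting the hits yields h.
    return sum(1 for rank, c in enumerate(ranked, start=1) if c >= rank)


def g_index(citations):
    """Largest g such that the g most-cited papers together have at
    least g*g citations (capped here at the number of papers)."""
    ranked = sorted(citations, reverse=True)
    g = 0
    for rank, total in enumerate(accumulate(ranked), start=1):
        if total >= rank * rank:
            g = rank
    return g


def i10_index(citations):
    """Number of papers with at least 10 citations."""
    return sum(1 for c in citations if c >= 10)


def h_estimate(citations):
    """Rule-of-thumb estimate of h from the total citation count alone
    (assumed form: 0.54 * sqrt(N))."""
    return 0.54 * sqrt(sum(citations))


if __name__ == "__main__":
    # Hypothetical citation counts, not anybody's real record.
    papers = [50, 30, 12, 10, 4, 3, 1]
    print("h   =", h_index(papers))
    print("g   =", g_index(papers))
    print("i10 =", i10_index(papers))
    print("h estimated from total citations = %.1f" % h_estimate(papers))
```

Feeding it the citation counts exported from, say, a Google Scholar profile gives all three numbers at once, which is handy if you want to quote them in a résumé.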

None of these indices is free from weaknesses, and none of them alone provides a reliable impression of a researcher's performance. Taken together, however, these indices can reveal much.

As an example, let's compare my indices to those of a valued colleague of about the same age: Steven C. Erwin. At the time of writing, his h, g and i10 indices are 37, 79, and 77, respectively. Evidently, the first two of these indices are close to mine, but his i10 index is drastically lower. Hey...that looks good for me, right?

It doesn't. Nearly equal g, but vastly differing i10 indices simply signify that the guy with the lower value for the latter (i.e., Steve) is more efficient: he publishes less trash that is immediately forgotten.

If I had to choose between Steve and me, I would take him. Fortunately, he's a theorist, and thus not of much use for anything. 😄