We now know that the tragic story of Mat Honan was made possible by a major blunder committed by Amazon and, above all, Apple. Does that mean that Mat is the innocent victim and Apple is to blame? Is it all Apple's 'fault'?

Technically? Come on. Morally? Yes, of course. But that's really nothing new. People with an IT background are hardly surprised by Apple's security shortcomings. Quite the opposite: they expect them. Mac OS X, for example, is widely considered to be the most vulnerable consumer operating system.

So why do people buy Apple's products? What's the reason for Apple's phenomenal success, and their almost hypnotic influence on the general public and the mass media?

Meet Clarke's third law: "Any sufficiently advanced technology is indistinguishable from magic." Nobody has embodied this statement more fully than Steve Jobs, Apple's chief magician, who single-handedly succeeded in turning Apple from a computer maker into a factory of lifestyle gadgets with a must-have factor.

The key to this transformation is the element of magic, or rather its celebration, in the way Apple presents its products, even when the technology behind them is as mundane as it gets. Take Apple's recent television commercial as an example. A girl takes a photo of an ugly boy with her iPhone, and a moment later the picture appears on her iPad. Most people are simply entranced by this little sketch, many are delighted, and only very few complicate things by asking how it's actually done. I've interviewed a couple of hard-core Apple users, and none of them had a clue. Nor did they care, as if it didn't matter.

But it really does, and this is where we return to Mat's own part in his little tragedy. Consider, for illustration, the typical home setup where several devices and appliances are connected to each other by a network. Needless to say, a wireless connection is generally much more convenient, and for some devices it is essential to function as intended (imagine a tablet tethered to a network cable). But this convenience has to be earned: wireless traffic must be encrypted, preferably with algorithms that are considered secure (WPA2 rather than the long-broken WEP, for instance). The consequences of ignorance can be severe.

This simple example demonstrates an immutable law of system administration: the easier for the users, the harder for the administrator. Making informed decisions about a system's security, especially when opening it up to access from the outside, requires an understanding of the available countermeasures and how to deploy them.

Apple tries to reduce the impact of this fact by cutting configuration options. They simply hope that the user, given only option A or B, cannot do anything that would endanger the integrity of the system. To the user, they present the system as infallible. Everything is "direct", "simple", "magic", and so on; nothing requires thought or consideration. Complexity and complications are unknown and unheard of.

This tendency toward oversimplification and toyification is a general one and goes well beyond Apple. It represents a genuine paradigm shift. "Computer" in 2012 means consumption, not creation. Whether you have an Android or an iOS smartphone, a Windows 8 tablet or an iPad, or a MacBook Air: you are basically positioned to consume media created by others. That's what these devices are made for, and what their software is optimized for. None of this, of course, changes physical limitations: a full HD movie still looks better on a 50" plasma screen than on the Retina display of the iPad 3. Basically, you are in the position of a kid getting a sneak peek at a book from the public library. From the kid's point of view, that's quite exciting.

Of course, the same paradigm shift is evident on the interwebs. Everything is social and cloudy. Sascha Lobo, Germany's most hated blogger, recently offered a surprisingly intelligent (really) assessment of the current situation: "Your internet is only borrowed". I'll let him speak for himself:

"Data on social networks must under all circumstances be treated as if it could be lost at any moment. Because it can be lost at any moment. And yet the like-crazed world seems to act otherwise: all of its digital creativity takes place on the borrowed internet. [...] On a blog, by contrast, you can do whatever you want. Annoyingly, that also means you have to do whatever you want. And constantly having to want is rather exhausting."

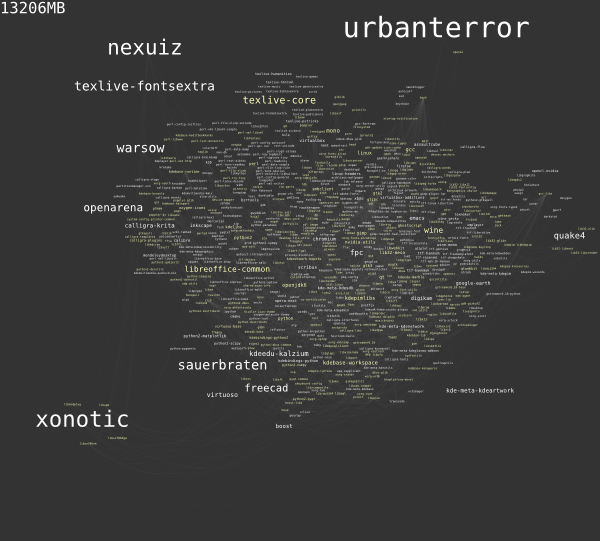

The experience of Mat Honan is more than a funny episode. It's a symptom. This blog entry will not change that, but it marks the end of one era and the start of a new one. I don't care much for the taste of the masses and will simply continue as before: with Arch Linux on my desktop and, for my social needs, a Debian-powered server owned by my friends and me.

Berlin, 35°C, signing off. 😉