I really didn't expect that, but my recent post about our new server attracted more questions than all posts in 2016 combined. I thought that people interested in such old-school IT issues would be essentially extinct, but apparently a few still exist.

In what follows, I try to provide some answers. I've grouped the questions such that they revolve around the same topic even if they were not asked by the same person. My answers are all short except for the last one, where I elaborate on server security.

Can every 'ordinary' citizen rent such a server? Or do we need a trade license (Gewerbeschein)? Do we need special certificates?

No, anyone can rent a server. Technically, however, it is not advisable to run a server without some basic knowledge of system and network administration. I'll return to that point in more detail below.

What can I do with my own server? What benefit does it offer?

You can do anything you can imagine doing with a computer. And what's most important: it's yours, and no Google/Facebook/Dropbox will suddenly discontinue the service to “optimize the user experience”. And if your hoster goes bankrupt, just move to the next one. You can always rent another server (unless the government decides to ban private servers to “fight cyberterrorism” or whatever else is en vogue).

With your own server, you could, for example, host your blog, as I do. You could run a mail server, set up your own cloud, provide groupware for yourself and your family, or use it as a game server and communicate via IRC, as we do at pdes-net.org. You could also install a Jabber server supporting OMEMO to provide a Skype and WhatsApp replacement for your family and friends that is guaranteed to be safe from eavesdropping.

What the heck is this Jessie and Stretch thing? Do I need to know that?

Perhaps not. But I'm not sure. If you don't know, I imagine that you are not, in all likelihood, very familiar with GNU/Linux. And that's not the ideal basis for administering your own server. Well, you could rent a Windows server, of course. I have no idea for what reason, though.

Can we rent a server anonymously?

Yes, for example here. Note: in case you want to register a domain (and who doesn't?), you want to do that anonymously as well. Otherwise your identity is revealed by a simple whois request (check, for example, 'whois pdes-net.org').

How do you connect to the server? Can I use it also by ftp? Or by the Windows Explorer? What about smartphones?

One has to distinguish the interface used to administer the server from the services it provides. Regarding the former, I connect exclusively via ssh (or rather via mosh, an ssh replacement). I also copy files this way, using the ssh-based tools scp, sftp and sshfs. ssh clients are also available for Android and iOS, so you can administer your server from anywhere you like. Concerning the latter, you can use any protocol for which you have configured the corresponding service; for access to a user's files via the Windows Explorer, that would be an smb server. However, I would definitely not recommend that. Rather, I'd use winscp or implement user access to files by webdav over https.

Can hackers attack the server?

Whatever you call them: there will be plenty of people trying to get access to your server. For example, in the three days in which our new server was running in its default configuration, 'lastb' revealed 6742 login attempts via ssh. Fortunately, our hoster had set a passphrase that was definitely better than the most popular one.
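To see where such attempts come from, the 'lastb' output can be summarised per source host. The following is only a minimal sketch: the helper name failed_logins_by_host is made up for illustration, and it assumes the usual 'lastb' column layout (user, terminal, host, timestamp), which may differ on your system.

```shell
# Summarise failed logins per source host from lastb-style input on stdin.
# Assumption: columns are user, tty, host, followed by the timestamp.
# failed_logins_by_host is a hypothetical helper, not a standard tool.
failed_logins_by_host() {
    awk 'NF >= 3 && $1 != "btmp" { count[$3]++ }
         END { for (host in count) print count[host], host }' | sort -rn
}

# Typical use (lastb reads /var/log/btmp and usually needs root):
#   lastb | failed_logins_by_host | head
```

The blank line and the trailing "btmp begins ..." line that 'lastb' prints are skipped by the two conditions in the awk pattern.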

What did you do to secure the server and to avoid hackers taking it over?

The measures I usually take are all very simple and do not require membership of the inner circles of server adminship. The core principle is to minimize exposure by scrutinizing the software base.

What do I mean by that statement? I can illustrate that best with an example from one of my Arch systems:

➜ ~ arch-audit

Package bzip2 is affected by ["CVE-2016-3189"]. Update to 1.0.6-6!

Package jasper is affected by ["CVE-2016-9591", "CVE-2016-8886"]. High risk!

Package libtiff is affected by ["CVE-2016-10095", "CVE-2015-7554"]. Critical risk!

Package openjpeg2 is affected by ["CVE-2016-9118", "CVE-2016-9117", "CVE-2016-9116", "CVE-2016-9115", "CVE-2016-9114", "CVE-2016-9113"]. High risk!

Package openssl is affected by ["CVE-2016-7055"]. Low risk!

As you see, libtiff is listed as critical, and the exploits partly date from 2015. Better to get rid of it, right? Sure, but:

➜ ~ whoneeds libtiff | tr -d '\n'

Packages that depend on [libtiff] aarchup artha auctex awoken-icons blueman chromium clipit conky conky-colors cups darktable djvulibre emacs emacs-minimap engrampa feh firefox galculator gimp gimp-webp gksu gnome-keyring gnome-themes-standard gnuplot gparted gpicview graphviz gsimplecal gst-libav gst-plugins-good gtk-engine-murrine gtk-engines gtk-theme-orion-dark gtk2-perl gtk3-print-backends guake gucharmap gvfs gvim hplip hsetroot inkscape keepassx2 kodi libbpg libcaca libreoffice-fresh lxappearance lxappearance-obconf lxinput lxrandr lxterminal lyx masterpdfeditor-qt5 mirage mpv mupdf mupdf-tools netpbm network-manager-applet nitrogen numix-circle-icon-theme-git obconf obkey obmenu-generator openbox openbox-themes orage owncloud-client pavucontrol pcmanfm portfolio povray pstoedit pstotext pychess python-matplotlib python-pillow python-scikit-image python-seaborn qpdfview rawtherapee ricochet scribes scribus scrot seahorse spacefm spyder3 sqlitebrowser terminator texlive-bibtexextra texlive-core texlive-fontsextra texlive-formatsextra texlive-games texlive-genericextra texlive-htmlxml texlive-humanities texlive-latexextra texlive-music texlive-pictures texlive-plainextra texlive-pstricks texlive-publishers texlive-science tint2 tumbler vertex-themes vesta virtualbox volumeicon webkitgtk2 wxpython xfce4-notifyd xfce4-terminal yelp zenity zim

As you see, essentially everything depends on this package. No way to get rid of it on a desktop system! But surely that's no issue on a pure command-line system like our server, right?

$ aptitude why libtiff5

i webalizer Depends libgd3 (>= 2.1.0~alpha~)

i A libgd3 Depends libtiff5 (>= 4.0.3)

Ok, let's remove webalizer. But after that:

$ aptitude why libtiff5

i pinentry-gtk2 Depends libgtk2.0-0 (>= 2.14.0)

i A libgtk2.0-0 Depends libgdk-pixbuf2.0-0 (>= 2.22.0)

i A libgdk-pixbuf2.0-0 Depends libtiff5 (>= 4.0.3)

Who installs pinentry-gtk2 on a system without X server? WHO?

/usr/bin/apt-get --auto-remove purge libtiff5

Requested-By: cobra (1000)

Install: pinentry-curses:amd64 (1.0.0-1, automatic)

Purge: libcroco3:amd64 (0.6.11-2), libpangoft2-1.0-0:amd64 (1.40.3-3), libcups2:amd64 (2.2.1-4), libimlib2:amd64 (1.4.8-1), w3m-img:amd64 (0.5.3-34), libgtk2.0-bin:amd64 (2.24.31-1), libgdk-pixbuf2.0-0:amd64 (2.36.3-1), libpixman-1-0:amd64 (0.34.0-1), libsecret-1-0:amd64 (0.18.5-2), librsvg2-common:amd64 (2.40.16-1), gnome-icon-theme:amd64 (3.12.0-2), libavahi-common-data:amd64 (0.6.32-1), libgail-common:amd64 (2.24.31-1), libavahi-common3:amd64 (0.6.32-1), libgtk2.0-0:amd64 (2.24.31-1), libxcursor1:amd64 (1:1.1.14-1+b1), libthai-data:amd64 (0.1.26-1), libxcb-shm0:amd64 (1.12-1), libid3tag0:amd64 (0.15.1b-12), libsecret-common:amd64 (0.18.5-2), libgail18:amd64 (2.24.31-1), libxcb-render0:amd64 (1.12-1), fontconfig:amd64 (2.11.0-6.7), libtiff5:amd64 (4.0.7-5), libatk1.0-0:amd64 (2.22.0-1), libpangocairo-1.0-0:amd64 (1.40.3-3), librsvg2-2:amd64 (2.40.16-1), pinentry-gtk2:amd64 (1.0.0-1), libgif7:amd64 (5.1.4-0.4), hicolor-icon-theme:amd64 (0.15-1), libthai0:amd64 (0.1.26-1), libgdk-pixbuf2.0-common:amd64 (2.36.3-1), libgtk2.0-common:amd64 (2.24.31-1), libgraphite2-3:amd64 (1.3.9-3), libjbig0:amd64 (2.1-3.1), gtk-update-icon-cache:amd64 (3.22.6-1), libatk1.0-data:amd64 (2.22.0-1), libharfbuzz0b:amd64 (1.2.7-1+b1), libcairo2:amd64 (1.14.8-1), libavahi-client3:amd64 (0.6.32-1), libpango-1.0-0:amd64 (1.40.3-3), libjpeg62-turbo:amd64 (1:1.5.1-2), libdatrie1:amd64 (0.2.10-4)

That was an example illustrating what I meant by “scrutinizing the software base”. But let's proceed step by step and relive the few hours when I configured our new server.

I first tighten the security of sshd:

on the client:

➜ ~ ssh-keygen -t ed25519

➜ ~ ssh-copy-id -i ~/.ssh/id_ed25519.pub pdes-net.org

on the server:

su -

$ vim /etc/ssh/sshd_config

Port XYZ

PermitRootLogin no

ChallengeResponseAuthentication no

PasswordAuthentication no

$ systemctl restart sshd.service

XYZ has to be replaced with a sensible port number, of course. 😉
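Since a typo in this file can lock you out, it may be worth checking the result before restarting the daemon. The sketch below only verifies that the three directives shown above are present and uncommented; check_sshd_hardening is a hypothetical helper, and it ignores 'Match' blocks and duplicate entries. For a syntax check, OpenSSH itself offers 'sshd -t' (run as root), and keeping a second ssh session open during the restart is a cheap safety net.

```shell
# Check that an sshd_config contains the three hardening directives from the
# text. check_sshd_hardening is a hypothetical helper, not part of OpenSSH.
# Limitation: it does not understand Match blocks or repeated keywords.
check_sshd_hardening() {
    config=${1:-/etc/ssh/sshd_config}
    status=0
    for directive in 'PermitRootLogin no' \
                     'ChallengeResponseAuthentication no' \
                     'PasswordAuthentication no'; do
        key=${directive% *}      # text before the space
        value=${directive#* }    # text after the space
        # Look for an uncommented "Key value" line, ignoring case and spacing.
        if ! grep -Eiq "^[[:space:]]*${key}[[:space:]]+${value}[[:space:]]*$" "$config"; then
            echo "missing or different: $directive" >&2
            status=1
        fi
    done
    return "$status"
}

# Usage: check_sshd_hardening /etc/ssh/sshd_config && echo "looks fine"
```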

Relieved, I next check which services are running on the system to have an overview:

I then look for services that have opened a port and are listening on it. I prefer to use 'netstat' for this purpose,1 but I usually also install 'iftop' and 'iptraf' to have a look at the traffic.

1 Note that you have to install the 'net-tools' package on many distributions, as 'netstat' has been deprecated since 2011 in favour of the 'ss' command of the iproute2 package. The 'netstat' output is much more compact and readable, though.
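For systems without 'netstat', here is a rough sketch based on the 'ss' command mentioned in the footnote. 'ss -tuln' lists listening TCP and UDP sockets numerically; the helper listening_ports is my own invention and assumes that the local address and port sit in the fifth column, as they do in the 'ss' versions I have seen.

```shell
# Reduce `ss -tuln` output (read from stdin) to a sorted list of proto/port.
# Assumption: column 1 is the protocol and column 5 is "local-address:port".
# listening_ports is a hypothetical helper, not part of iproute2.
listening_ports() {
    awk '$1 == "tcp" || $1 == "udp" {
             n = split($5, part, ":")   # the port follows the last colon
             print $1 "/" part[n]
         }' | sort -u
}

# Usage: ss -tuln | listening_ports
# Add -p (as root) to ss itself to see which process owns each socket.
```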

Obviously, it is kind of paradoxical to rely on a local check on a system which might have been already compromised. I thus also use 'nmap' to have a look from outside:

nmap -sS -sU -T4 -A -v pdes-net.org

The simple tests above reveal that the server was basically prepared to run an online shop and thus has plenty of services running: Apache, nginx, postfix, dovecot, mysqld, sshd, froxlor, etc. I just stop and remove all of them except sshd:

systemctl stop <service>

systemctl disable <service>
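With many services, typing these two commands over and over gets tedious. The following dry-run sketch just prints them for a list of services and skips the ssh daemon. The function name plan_service_removal and the unit names in the example call are assumptions; check the actual names on your system with 'systemctl list-units --type=service'.

```shell
# Print (not run) the stop/disable commands for every service except sshd.
# plan_service_removal is a hypothetical helper; the unit names below are
# illustrative guesses based on the services named in the text.
plan_service_removal() {
    for service in "$@"; do
        case $service in
            sshd|ssh) continue ;;   # never touch the only way into the machine
        esac
        echo "systemctl stop $service"
        echo "systemctl disable $service"
    done
}

plan_service_removal apache2 nginx postfix dovecot mysql sshd
```

Once the printed list looks right, it can be piped into 'sh' as root.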

deinstall them:

After that, there are plenty of orphans that I remove with

Update:

Upgrade:

vim /etc/apt/sources.list

:%s/jessie/stretch/g

ZZ

wajig daily-upgrade

wajig sys-upgrade

This last step may appear questionable, as Debian Testing (currently called Stretch) does not receive the same security support as Stable (currently called Jessie). Well, I definitely prefer Testing for its more up-to-date packages, and I think it's more important to avoid packages from the contrib and non-free repositories.
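The substitution done in vim above can also be scripted, which has the side benefit of leaving a backup behind. A minimal sketch, assuming the release name appears literally in the file; switch_release is a hypothetical helper, not a Debian tool.

```shell
# Replace one release name with another in an APT sources file.
# switch_release is a hypothetical helper; arguments: file, old name, new name.
# The original file is kept as <file>.bak.
switch_release() {
    file=$1
    old=$2
    new=$3
    cp -p "$file" "$file.bak" &&
    sed "s/$old/$new/g" "$file.bak" > "$file"
}

# As root: switch_release /etc/apt/sources.list jessie stretch
```

If the upgrade goes wrong, the .bak file shows what the sources looked like before.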

I then check the support status of my installation:

debian-security-support

“...identify installed packages for which support has had to be limited or prematurely ended...”

Everything's supported. The more software you have installed, the less likely is this result.

And finally, I search for vulnerabilities (similar to arch-audit above):

debsecan

“...generates a list of vulnerabilities which affect a particular Debian installation...”

CVE-2016-2148 busybox (remotely exploitable, high urgency)

What is busybox doing here? Well, it's gone.

Update: Damn, I forgot – it's needed for update-initramfs. No big deal, though: what you can remove that easily can as easily be installed again. So don't worry, you won't be able to accidentally remove the kernel or libc. 😉
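On a system with more packages, the debsecan list gets long, and it helps to reduce it to the packages that matter most. The sketch below filters for lines of the form shown above (remotely exploitable, high urgency) and prints the package names. The helper name urgent_remote_packages is made up, and the filter relies on exactly the output format quoted in this post.

```shell
# From debsecan-style lines on stdin, list the packages that have at least one
# remotely exploitable, high-urgency vulnerability.
# Assumption: lines look like "CVE-ID package (remotely exploitable, high urgency)".
# urgent_remote_packages is a hypothetical helper, not part of debsecan.
urgent_remote_packages() {
    grep -E '\(.*remotely exploitable.*high urgency.*\)' |
        awk '{ print $2 }' |
        sort -u
}

# Usage: debsecan | urgent_remote_packages
```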

After these nine steps (the nine hidden secrets for perfect server security!!!), the total size of our installation (disregarding user content in /home and in /var/www) is less than 1.2 GB.

What have I achieved so far? Well, first of all, I have stopped and removed all running services I do not need. That's certainly the most important contribution to server security, as all of these services were remotely accessible. Second, I have upgraded the entire installation to a current version of the distribution in the belief that, in this version, previous CVEs tend to have been recognized and fixed already. Third, I have identified and removed the remaining programs and libraries with vulnerabilities rated as critical.

What more can I do? Can I rate the security of the system somehow, and monitor it?

Yes. Such a rating is, for example, offered by lynis, a security audit system by rkhunter author Michael Boelen, which provides a wealth of helpful information and advice out of the box without the need to configure anything. Great for beginners, useful for advanced users. It is worth recommending for its suggestions on configuring the ssh server alone. But beware and don't lock yourself out. 😉

With the current configuration, lynis gives pdes-net.org a hardening index of 78%. I'm quite satisfied with that score (you probably won't get a 100% as long as the server is still connected to a network).
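To keep an eye on that number without reading the whole audit output, it can be pulled out of the lynis report file. This is a sketch under two assumptions that you should verify on your installation: that the report lives in /var/log/lynis-report.dat and that it contains a line of the form 'hardening_index=78'. The helper names are my own.

```shell
# Read the hardening index from a lynis report file.
# Assumptions: default report path and a "hardening_index=NN" line in it.
# hardening_index and check_hardening are hypothetical helpers.
hardening_index() {
    report=${1:-/var/log/lynis-report.dat}
    sed -n 's/^hardening_index=//p' "$report" | tail -n 1
}

# Succeed only if the index is at least the given minimum.
check_hardening() {
    minimum=$1
    index=$(hardening_index "${2:-/var/log/lynis-report.dat}")
    [ -n "$index" ] && [ "$index" -ge "$minimum" ]
}

# Usage (after a lynis run, as root):
#   check_hardening 75 || echo "hardening index dropped below 75"
```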

How can I make sure that we keep that score? Well, lynis is really very helpful in that respect, since it suggests, depending on the distribution, the installation of several useful tools that help in future security-related decisions.

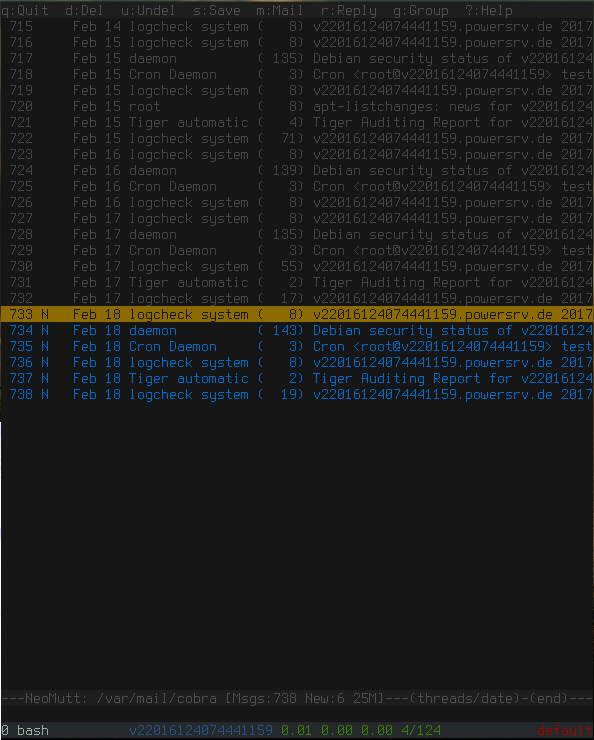

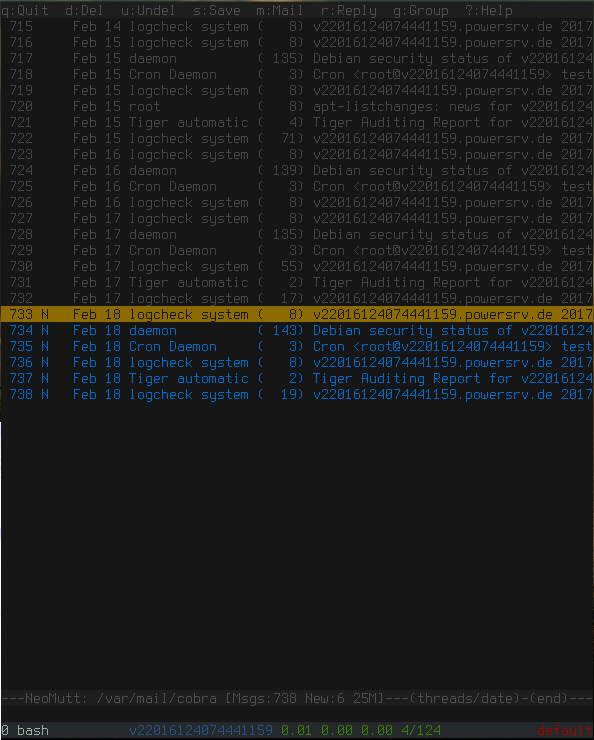

Many of these tools, however, work best when they are executed by a cronjob in the background and inform the administrator by local mail in case there's anything to report. For this reason, it is imperative for any Linux server installation to include a functional mail transfer agent (MTA) configured for local delivery. In Debian, I always choose exim because it's so wonderfully easy to configure for this case. I'm so used to this genie on the system, telling me about the good and the bad, that I install an MTA not only on servers, but on every system I administer (although I usually prefer postfix over exim). Here's an example taken today from pdes-net.org:

When performing system updates on Debian, I additionally like to have the following tools as little helpers in the background. apt-listbugs “retrieves bug reports from the Debian Bug Tracking System and lists them”, apt-listchanges “compares a new version of a package with the one currently installed”, apt-show-versions “shows upgrade options within the specific distribution of the selected package”, checkrestart (part of debian-goodies) “helps to find and restart processes which are using old versions of upgraded files (such as libraries)”, and needrestart “checks which daemons need to be restarted after library upgrades”. I also like logcheck which “helps spot problems and security violations in your logfiles automatically and will send the results to you in e-mail” (see above).

These tools are helpful, but I like to go one step further and have an automated, daily security check. That's exactly what checksecurity does, which, according to Debian, performs “basic system security checks”. Well, how basic depends a lot on the additional packages installed: recommended are, among others, tiger, which in turn refers to other packages such as chkrootkit, “searching the local system for signs that it is infected with a 'rootkit'”, as well as file monitoring systems such as tripwire and aide, which make little sense on a rolling-release system. This fact does not diminish the value of checksecurity, of course, which I very much recommend installing.

You have certainly noticed that I have so far not even mentioned the security evergreen: the firewall. Well, I do recognize the value of an enterprise-class firewall for a corporate network, but here we are talking about software running on the very system that we desire to protect. This scenario reminds us of the infamous 'personal firewalls' under Windows, the legendary discussions on nntp://de.comp.security.firewall and fefe's succinct summary:

Do Personal Firewalls improve security? — No.

Why do so many people install them, then? — Because those people are all idiots.

Well, nobody would judge the built-in firewall functionality of Linux equally harshly, and there are even one or two arguments in favor of using it. My view is that this built-in firewall is secondary compared to the measures discussed above, but it certainly doesn't hurt to use it. And that's what I do:

ufw default deny

ufw limit ssh

ufw allow http

ufw allow ...

One final word. Be careful not to overdo things. The more security-related stuff you install, the more messages you will get, and the more dramatic it will all sound. For example, chkrootkit identifies the mosh server instance running on udp port 60001 as an infection when running the bindshell test. That's a trivial false positive, but in the grip of security paranoia, it will be amplified to the point that it can unbalance even experienced administrators. Be calm, practice Zen, and acquire enough knowledge to immunize yourself against a full-blown security hysteria.